Nvidia (NASDAQ:NVDA) has kicked off fiscal 2024 with an impressive beat and raise, outperforming market expectations by wide margins. Although the stock already trades at an outsized premium, this does not diminish the appeal and durability of the underlying bullish thesis, which rests on Nvidia’s role as the backbone of key next-generation technologies and remains intact.

As discussed in previous coverage, generative AI is also expected to further cloud TAM expansion due to the extensive compute power required to support the ongoing development, training and deployment of large language models and ensuing applications. The advent of generative AI technologies has brought to the forefront the importance of Nvidia’s role in the development of AI and accelerated computing solutions – spanning both hardware and full-stack software – underscoring a multi-year and multi-trillion-dollar upgrade cycle that is still in the early innings of the adoption curve.

While previous optimism on disruptive technology trends, including electric and autonomous mobility, web3, and the metaverse, has since faded due to cost, regulatory and implementation challenges, the latest momentum in generative AI and the ensuing market confidence have been resilient despite a volatile market climate blighted by mounting macroeconomic uncertainties. The following analysis will dive into considerations that support generative AI’s role as the “iPhone moment for AI”, and gauge Nvidia’s role in capturing related growth opportunities ahead.

The Differentiating Factor for Generative AI

Using ChatGPT has been easy and straightforward. And the integration of said technology into day-to-day settings spanning work and leisure has transitioned from novel to mainstay in a matter of months, despite little understanding among mainstream users of the technologies and buzzwords (e.g. LLMs, generative AI, foundation models, finetuning, etc.) behind it. This differs drastically from other disruptive tech trends observed over the past several years, including web3 and blockchain solutions that few can grasp, and metaverse experiences that have yet to win mainstream adoption.

Nvidia CEO Jensen Huang’s allusion to the advent of generative AI as an “iPhone moment” is corroborated by market expectations that the budding subfield will become the “fourth major capacity” to further technology’s “capabilities on top of the previous three in aggregate: internet, SaaS/Cloud, and mobility”. Specifically, the advent of the internet and its ensuing evolution with wireless connectivity infrastructure has been critical in enabling cloud-computing solutions through hyperscalers, which inadvertently drove the deployment of cloud-based software / SaaS business models that transformed legacy on-premise IT environments. The internet, cloud, and software have subsequently collectively ushered in the development and mainstream adoption of smartphones – particularly Apple’s (AAPL) iPhone – by enabling application- and software-based computing in the palm of the hand.

And the recent momentum in the widespread implementation of generative AI solutions into key existing “technology verticals” (e.g. low- / no-code software development; recommendation systems; digital advertising; cloud-based productivity; digital content creation; etc.) has unlocked a new roadmap for digital transformation, akin to wireless connectivity, cloud, and mobility. Existing tech participants built on the preceding three major transformative capacities – such as hyperscalers Google (GOOG / GOOGL), Microsoft (MSFT) and Amazon (AMZN), technology device and peripherals giant Apple, and mission-critical enabler Nvidia – are bound to benefit from an outsized first-mover advantage in the deployment of future transformative capacities too, including generative AI, by leveraging their dominant market shares and “competitive moats” in their respective fields of expertise.

Where Nvidia Fits In

And time and again, Nvidia has been ready to serve and usher in the adoption of transformative changes, exhibiting strength in maintaining its leadership and competitive moat. As discussed by Jensen Huang during Nvidia’s latest earnings call, the transformative change resulting from generative AI’s adoption is expected to unlock a multi-year, trillion-dollar upgrade cycle across global data center infrastructure, a tailwind that Nvidia has long been preparing for and is ready to address.

Now, let me talk about the bigger picture and why the entire world’s data centers are moving towards accelerated computing. It’s been known for some time and you’ve heard me talk about it, that accelerated computing is a full stack problem, but it is full stack challenge, but if we could successfully do it in a large number of application domain has taken us 15 years…And what happened is, when generative AI came along, it triggered a killer app for this computing platform that’s been in preparation for some time…

The world’s $1 trillion data center is nearly populated entirely by CPUs today…In the future, it’s fairly clear now with this — with generative AI becoming the primary workload of most of the world’s data centers generating information, it is very clear now that — and the fact that accelerated computing is so energy efficient, that the budget of the data center will shift very dramatically towards accelerated computing and you’re seeing that now…you’re seeing the beginning of call it a 10-year transition to basically recycle or reclaim the world’s data centers and build it out as accelerated computing. You’ll have a pretty dramatic shift in the spend of the data center from traditional computing, and to accelerated computing with smart NICs, smart switches, of course, GPUs, and the workload is going to be predominantly generative AI.

Source: Nvidia F1Q24 Earnings Call Transcript

Specifically, the opportunity goes beyond just AI processors and software, which provide segregated opportunities to sectors spanning hyperscalers, chipmakers, and software developers. Instead, it is a “full stack” challenge that includes re-engineering the software and a “data center scale” problem in optimizing the computing system, which Nvidia is ready to comprehensively address given years of expertise in bettering its accelerated computing capabilities.

Nvidia’s continued commitment to honing its full-stack hardware-software strategy is corroborated by its recent introduction of the “DGX H100”, which is a “fully integrated hardware and software” supercomputer built on the latest Hopper architecture optimized for AI applications. DGX H100 offers “6x more performance, 2x faster networking, and high-speed scalability” to address increasingly complex inference workloads like generative AI at optimal cost and energy efficiency without sacrificing performance. DGX can also be accessed off-premise via “DGX Cloud” offered through hyperscalers including Azure, Google Cloud Platform and Oracle Cloud (ORCL), expanding end-market access to incremental NVIDIA software and other solutions aimed at facilitating the development and deployment of generative AI technologies and other complex workloads with similar compute capacity demands.

The company has also recently unveiled the L4 Tensor Core GPU, L40 GPU, H100 NVL GPU, and Grace Hopper Superchip Architecture – all of which are inference platforms that have been optimized for AI applications, leveraging the “NVIDIA AI software stack”:

- L4 Tensor Core GPU: The data center GPU is powered by Nvidia’s latest “Ada Lovelace” architecture optimized for “professional graphics, video, AI, and compute”. Specifically, the L4 GPU is designed to offer up to 2.5x better performance relative to previous generations in rendering “compute-intensive generative AI inference”, such as video streaming, recommendation systems and AI assistants, with “up to 99% better energy efficiency and lower total cost of ownership compared to traditional CPU-based infrastructure”. GCP is the first hyperscaler to implement the L4 platform via its G2 virtual machines for facilitating generative AI advances.

- L40 GPU: The L40 GPU is also based on the Ada Lovelace architecture and is designed to optimize performance for “visualization rendering, virtualization, simulation, and AI”. This includes workflows in NVIDIA Omniverse and other virtual environments, as well as “AI-enabled graphics, videos, and image generation”, facilitating transformation in content creation.

- H100 NVL GPU: The H100 NVL GPU is a variant of the H100 data center GPU optimized for deploying LLMs (e.g. ChatGPT). The AI-optimized data center processor is a “dual-GPU” connected by Nvidia’s “NVLink” technology. As discussed in previous coverage, Nvidia NVLink enables “seamless, high-speed computation between every GPU” within a system to facilitate the compute demands of increasingly complex AI and high-performance computing workloads.

- Grace CPU and Hopper Superchip Architecture: The Grace CPU line-up includes the latest “Grace Hopper Superchip”, which “combines the Grace and Hopper architectures” deployed last year using the NVIDIA NVLink technology described above to address increased compute demands from AI and HPC applications, such as the deployment of LLMs. The latest development also complements the “Grace CPU Superchip” announced last year in supporting Nvidia’s expansive foray in data center processors.

Digging deeper into the software side, Nvidia offers three main software stacks in addressing AI workloads across different verticals and use cases:

- NVIDIA AI Foundations: This is a cloud service provided by Nvidia to enterprise users looking to build and deploy customized generative AI solutions. NVIDIA AI Foundations offers foundation models that can understand text (i.e. NVIDIA NeMo), visual imagery (i.e. NVIDIA Picasso), and biology language (i.e. NVIDIA BioNeMo), as well as a comprehensive suite of frameworks and APIs to facilitate the development of related generative AI solutions.

- NVIDIA AI Enterprise: NVIDIA AI Enterprise is a comprehensive suite of AI and data analytics tools designed for streamlining the development and deployment of advanced AI solutions such as “diagnostics in healthcare, smart factories for manufacturing, and fraud detection in financial services” for commercial customers. Similar to NVIDIA AI Foundations, AI Enterprise also provides a comprehensive suite of “frameworks, pre-trained models, and development tools” to optimize generative AI developments at the enterprise level.

- NVIDIA Omniverse: The virtual aspect of NVIDIA Omniverse is expected to play an increasingly critical role in facilitating AI training for applications across different verticals, like industrial and robotics. Specifically, NVIDIA has identified the Omniverse as a critical link in “aligning an AI for ethics” by facilitating “reinforcement learning from human feedback”.

Taken together, Nvidia’s full stack offering addresses the transformative demands from key verticals spanning cloud service providers, consumer internet companies, and enterprises ensuing from the advent of generative AI. The development continues to underscore Nvidia’s favourable prospects in capturing compute TAM expansion enabled by generative AI, which will likely become the “primary workload of most of the world’s data centers”.

Valuation Analysis

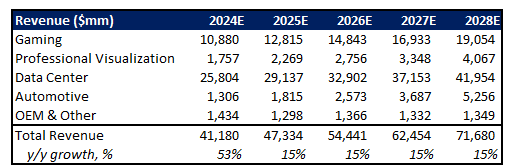

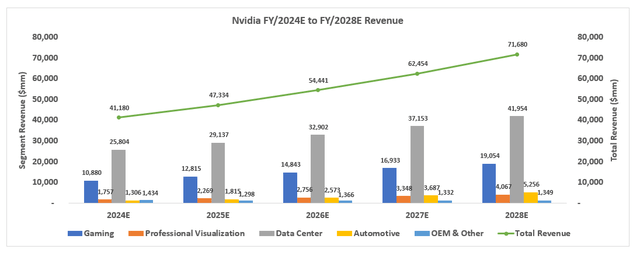

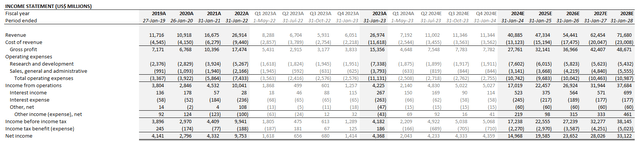

Nvidia management has guided fiscal second quarter revenue of $11 billion (+64% y/y; +53% q/q). This would imply close to 80% growth in data center sales based on the segment’s historical share of consolidated revenues, as well as expectations for continued tepid performance in the chipmaker’s other businesses which still face a challenged, though improved, demand environment in consumer-facing end-markets.
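The guided growth rates can be sanity-checked by backing out the prior-period revenue they imply. The short sketch below uses only the figures quoted above ($11 billion, +64% y/y, +53% q/q); the function name `implied_base` is illustrative, and the results are approximate because the quoted percentages are rounded.

```python
def implied_base(guided_revenue: float, growth_rate: float) -> float:
    """Back out the prior-period revenue implied by a guided figure and its growth rate."""
    return guided_revenue / (1.0 + growth_rate)


GUIDED_F2Q24_REVENUE = 11.0  # $ billions, per the management guidance quoted above

# Year-ago quarter revenue implied by +64% y/y growth
year_ago_quarter = implied_base(GUIDED_F2Q24_REVENUE, 0.64)

# Preceding quarter revenue implied by +53% q/q growth
prior_quarter = implied_base(GUIDED_F2Q24_REVENUE, 0.53)

print(f"Implied year-ago quarter revenue: ${year_ago_quarter:.2f}B")
print(f"Implied prior quarter revenue:    ${prior_quarter:.2f}B")
```

On these inputs the guidance implies roughly $6.7 billion of revenue in the year-ago quarter and roughly $7.2 billion in the preceding quarter.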

And improved supply availability expected in the second half of the year will likely help Nvidia further capitalize on existing demand momentum in data center sales, and reinforce prospects of outsized growth through fiscal 2024. Higher-priced data center sales, alongside the accelerating adoption of complementary higher-margin software led by momentum in generative AI, will also help to improve profitability at Nvidia, and offset near-term inflationary headwinds and other inefficiencies in its weaker segments amid the challenging macroeconomic environment.

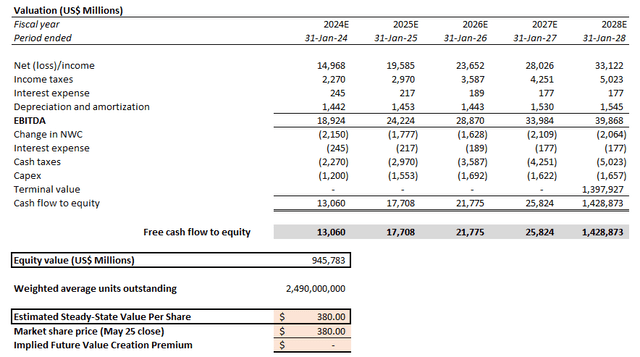

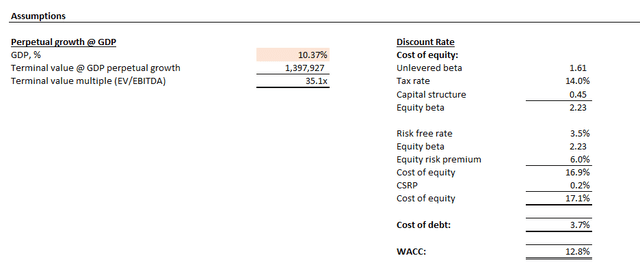

Based on a discounted cash flow analysis of projected cash flows derived from our fundamental forecast for Nvidia, and applying a 12.6% WACC in line with the chipmaker’s capital structure and risk profile, the stock’s current price of about $380 apiece implies a perpetual growth rate of more than 10%. This continues to represent a significant valuation premium relative to Nvidia’s peers as well as to the anticipated pace of global economic growth.
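To illustrate how a market price can be translated into an implied perpetual growth rate, the sketch below inverts a standard DCF with a Gordon-growth terminal value. It is not the author's model: the cash flow figures are hypothetical placeholders, the function names are illustrative, and the sketch ignores any adjustment between enterprise and equity value. Only the 12.6% WACC is taken from the analysis above.

```python
def dcf_value(cash_flows, wacc, growth):
    """Present value of explicit cash flows plus a Gordon-growth terminal value."""
    if growth >= wacc:
        raise ValueError("perpetual growth must be below the discount rate")
    n = len(cash_flows)
    pv_explicit = sum(cf / (1 + wacc) ** t for t, cf in enumerate(cash_flows, start=1))
    # Terminal value at year n: TV = CF_n * (1 + g) / (r - g)
    terminal_value = cash_flows[-1] * (1 + growth) / (wacc - growth)
    return pv_explicit + terminal_value / (1 + wacc) ** n


def implied_perpetual_growth(value, cash_flows, wacc):
    """Solve the same DCF for the growth rate g that reproduces a given value."""
    n = len(cash_flows)
    pv_explicit = sum(cf / (1 + wacc) ** t for t, cf in enumerate(cash_flows, start=1))
    # Terminal value (at year n) that the given value must be assigning
    terminal_value = (value - pv_explicit) * (1 + wacc) ** n
    final_cf = cash_flows[-1]
    # Rearranging TV = CF_n * (1 + g) / (r - g) for g
    return (terminal_value * wacc - final_cf) / (terminal_value + final_cf)


WACC = 0.126  # discount rate cited in the analysis above

# HYPOTHETICAL five-year cash flows in $ billions; NOT the author's forecast
HYPOTHETICAL_CASH_FLOWS = [10.0, 15.0, 20.0, 25.0, 30.0]

# Round trip: value the placeholder cash flows at 10% perpetual growth,
# then recover that growth rate from the resulting value.
value_at_10pct = dcf_value(HYPOTHETICAL_CASH_FLOWS, WACC, 0.10)
recovered_g = implied_perpetual_growth(value_at_10pct, HYPOTHETICAL_CASH_FLOWS, WACC)
print(f"Implied perpetual growth: {recovered_g:.2%}")
```

Because the terminal value grows without bound as the growth rate approaches the discount rate, an implied rate above 10% against a 12.6% WACC leaves a narrow spread, which is why the valuation is highly sensitive to small changes in either assumption.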

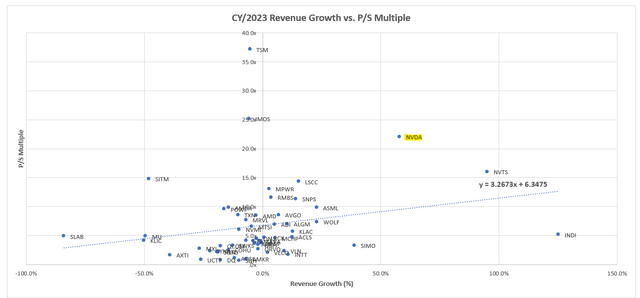

And considering a multiple-based valuation approach relative to its broader tech and semiconductor peers, Nvidia’s current market value at 40x estimated earnings (or 70x prior to the latest fundamental forecast adjustments for actual fiscal first quarter results and fiscal second quarter guidance) and 20x forward sales (or 25x prior to the latest fundamental forecast adjustments) also represents a substantial premium attributable to its fundamental outlook.

Source: Author, with data from Seeking Alpha

The Bottom Line

While Nvidia’s mission-critical role in enabling key next-generation technologies reinforces its long-term bullish thesis, the stock continues to trade at a lofty premium that makes it an expensive buy at current levels, especially considering regulatory and macroeconomic risks in the immediate term. Although Nvidia’s extensive reach across key computing capacities represented by the likes of Google, Amazon, Microsoft and Apple reinforces its prospects of achieving a $1+ trillion market valuation, there are still probable near-term downside risks that could undermine the durability of the stock’s recent rally, making the risk-reward opportunity unfavourable at current levels.
